Seoul National University

Overview Video

CVPR Technical Talk Video

- Keywords: NeRF, Egocentric video, Large-scale
- Paper: PDF Link
- Dataset: Google Drive Link
- Code: GitHub Link
- Video: Video Link

Abstract

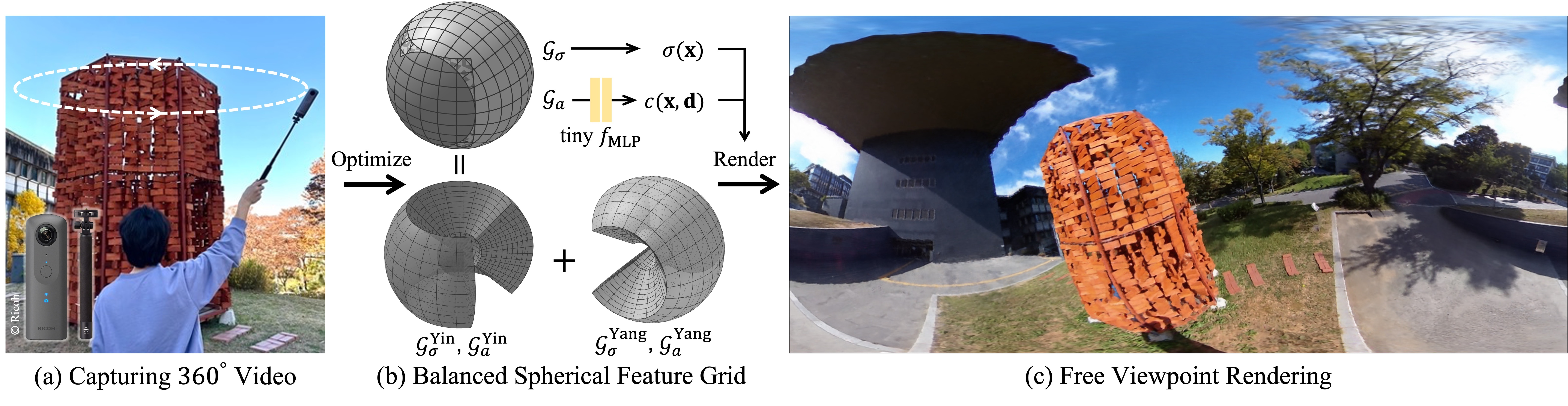

We present EgoNeRF, a practical solution for reconstructing large-scale real-world environments as VR assets. Given a few seconds of casually captured 360° video, EgoNeRF can efficiently build neural radiance fields. Motivated by the recent acceleration of NeRF using feature grids, we adopt spherical coordinates instead of conventional Cartesian coordinates. A Cartesian feature grid is inefficient for representing large-scale unbounded scenes because its resolution is spatially uniform, regardless of the distance from the viewer. The spherical parameterization aligns better with the rays of egocentric images, yet still enables factorization for performance enhancement. However, a naïve spherical grid suffers from singularities at the two poles and cannot represent unbounded scenes. To avoid the singularities near the poles, we combine two balanced grids, which results in a quasi-uniform angular grid. We also partition the radial grid exponentially and place an environment map at infinity to represent unbounded scenes. Furthermore, our resampling technique for grid-based methods increases the number of valid samples for training the NeRF volume. We extensively evaluate our method on our newly introduced synthetic and real-world egocentric 360° video datasets, where it consistently achieves state-of-the-art performance.
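The abstract packs three geometric ideas into a few sentences: exponentially spaced radial shells, two rotated spherical grids that keep every point away from a pole, and an environment map that supplies whatever lies beyond the last shell. Below is a minimal Python sketch of those ideas under our own assumptions; it is not the released EgoNeRF code. The function names, the constants `R_MIN`, `R_MAX` and `N_RADIAL`, and the selection rule "use whichever grid's equator is closer, with the second grid's poles on the x-axis" are hypothetical simplifications, not the paper's exact partition. The `composite` function is standard NeRF alpha compositing, with the leftover transmittance assigned to an environment-map color.

```python
import math

# Hypothetical grid parameters, chosen only for illustration.
R_MIN = 0.5     # innermost shell radius (assumed)
R_MAX = 100.0   # outermost shell radius; beyond it the environment map is used (assumed)
N_RADIAL = 64   # number of radial cells (assumed)


def radial_boundaries(r_min=R_MIN, r_max=R_MAX, n=N_RADIAL):
    """Exponentially spaced shell radii: r_i = r_min * (r_max / r_min) ** (i / n)."""
    return [r_min * (r_max / r_min) ** (i / n) for i in range(n + 1)]


def radial_index(r, r_min=R_MIN, r_max=R_MAX, n=N_RADIAL):
    """Continuous radial coordinate in [0, n]; a cell's thickness grows linearly with r."""
    r = min(max(r, r_min), r_max)  # clamp into the gridded range
    return n * math.log(r / r_min) / math.log(r_max / r_min)


def to_balanced_angles(x, y, z):
    """Map a nonzero point to (grid_id, theta, phi) using two spherical grids.

    Grid 0 has its poles on the z-axis; grid 1 is the same grid rotated so its
    poles lie on the x-axis (an assumed rotation). Each point uses the grid whose
    equator it is closer to, so theta always stays within [pi/4, 3*pi/4].
    """
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    if abs(z) <= abs(x):
        grid_id, (a, b, c) = 0, (x, y, z)   # pole axis = z
    else:
        grid_id, (a, b, c) = 1, (y, z, x)   # cyclic permutation = rotation; pole axis = x
    theta = math.acos(max(-1.0, min(1.0, c)))  # polar angle from this grid's pole
    phi = math.atan2(b, a)                      # azimuth in (-pi, pi]
    return grid_id, theta, phi


def composite(densities, colors, deltas, env_color):
    """Front-to-back alpha compositing; leftover transmittance sees the environment map."""
    transmittance = 1.0
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        rgb = [acc + weight * ci for acc, ci in zip(rgb, color)]
        transmittance *= 1.0 - alpha
    # Light not absorbed inside R_MAX is taken from the environment map at infinity.
    return [acc + transmittance * e for acc, e in zip(rgb, env_color)]


if __name__ == "__main__":
    # With the camera at the grid origin, every point on a ray shares (theta, phi).
    for t in (1.0, 10.0, 50.0):
        point = (0.3 * t, 0.4 * t, 0.5 * t)
        print(to_balanced_angles(*point), radial_index(t))
```

With exponential radial spacing, a shell's thickness is proportional to its radius, just like the arc length of an angular cell, so cells keep a roughly constant aspect ratio from near the viewer out to `R_MAX`. The demo also shows why a spherical grid suits egocentric capture in this sketch: a ray from the origin only changes its radial index, never its angular cell.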

Dataset Overview

EgoNeRF takes a casually captured egocentric video as input. Below are samples from our synthetic OmniBlender dataset and our real-world Ricoh360 dataset. By simply rotating a camera attached to a stick, we can capture a large environment around the viewer. The videos shown here are low-resolution versions of the original dataset, which can be downloaded from the link above.

OmniBlender

Ricoh360

Qualitative Results

Free-view Rendered Video

Comparison with Baselines

- Bistro Bike scene in the OmniBlender dataset

Ground Truth

Mip-NeRF 360

NeRF

DVGO

TensoRF

EgoNeRF (Ours)

Ground Truth

Mip-NeRF 360

NeRF

DVGO

TensoRF

EgoNeRF (Ours)

Citation

@inproceedings{Choi_2023_CVPR,
  author = {Choi, Changwoon and Kim, Sang Min and Kim, Young Min},
  title = {Balanced Spherical Grid for Egocentric View Synthesis},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2023},
  pages = {16590-16599}
}